It’s hard for highly technical people not to dominate conversations about tech. But as an Engineering Manager it’s important not to do this: ownership should sit with the people doing the work, not with their managers.

So how do you manage people with less experience than you and not become a dictator?

Something I’ve been working on with my teams lately is coming up with high-level guidelines for them to work with, highlighting common pitfalls and encouraging best practices that come from the experienced people in the organisation. Having a common understanding of what’s good or best helps people move in the right direction while giving them the freedom to design and build as they like, as long as the guidelines are not too specific and leave room for interpretation that may differ slightly with each team’s or engineer’s individual context.

For example, I would not give my teams a guideline of “Code Coverage >80%”. This is too specific: depending on the application a team is working on, they may be happy with 70% or even 60%, and that’s OK. A better way to phrase this, if coverage is important to you, would be “Teams should value and have high test coverage”.

This again, though, is too specific. If you have poor assertions, it doesn’t matter what % coverage you have, right? Code coverage serves a higher purpose, and on its own it does not achieve that purpose, so it’s better to focus on the higher-level goals.
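To make the point about poor assertions concrete, here is a minimal sketch in Python. The `apply_discount` function and both tests are hypothetical, invented purely for illustration. The first test executes every line of the function, so a coverage tool would report it as fully covered, yet it checks nothing. The second test covers exactly the same lines but would actually fail if the behaviour broke.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price in cents after a percentage discount (hypothetical example)."""
    if percent < 0 or percent > 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


def test_runs_every_line_but_checks_nothing() -> None:
    # Both branches are executed, so line coverage is complete,
    # but this test would still pass if the arithmetic were wrong.
    apply_discount(10_000, 10)
    try:
        apply_discount(10_000, 150)
    except ValueError:
        pass


def test_checks_behaviour() -> None:
    # Same lines covered, but a broken formula or missing validation fails here.
    assert apply_discount(10_000, 10) == 9_000
    assert apply_discount(8_000, 0) == 8_000
    try:
        apply_discount(10_000, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a percent above 100")
```

Both tests would earn a team the same coverage number, which is why the number alone says little about how much confidence the tests provide.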

Code coverage, for me, is a part of test automation, and the goal of test automation is to reduce bugs, production issues and so on. These, in my opinion, are better to focus on, as in my example below:

Systems should have test automation that brings confidence and inspires courage in engineers

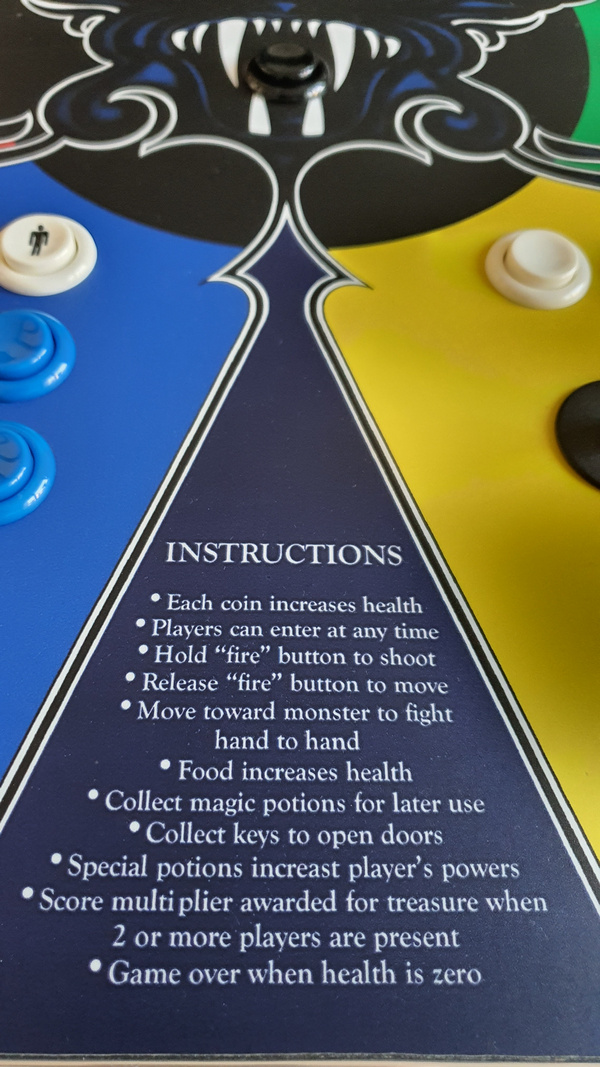

Where I mention test automation, I specifically describe the behaviour I have seen in high-performing teams. I’ve worked in teams where the “deploy” button is pressed with little worry about the impact, because the engineers are confident in the pipelines, monitoring and rollbacks that are in place. This, for me, is the high-level goal I want my engineers to strive for: real Continuous Delivery.

So here’s the full list I have in draft. Feel free to comment; I’ll do some follow-up posts that dive into some of them.

I used the word “Manifesto” because when I showed the list to another manager that’s what he called it, and I thought it was cool 🙂

Guiding principles for Systems

- Systems should be domain specific, responsible for one or a few domains

- Systems should be small in the overwhelming majority of cases. Small systems limit complexity

- Systems should be consistent in design in the overwhelming majority of cases

- Systems should be easy to build

- Systems should have test automation that brings confidence and inspires courage in engineers

- Systems should be easy to contribute to and not require extensive training

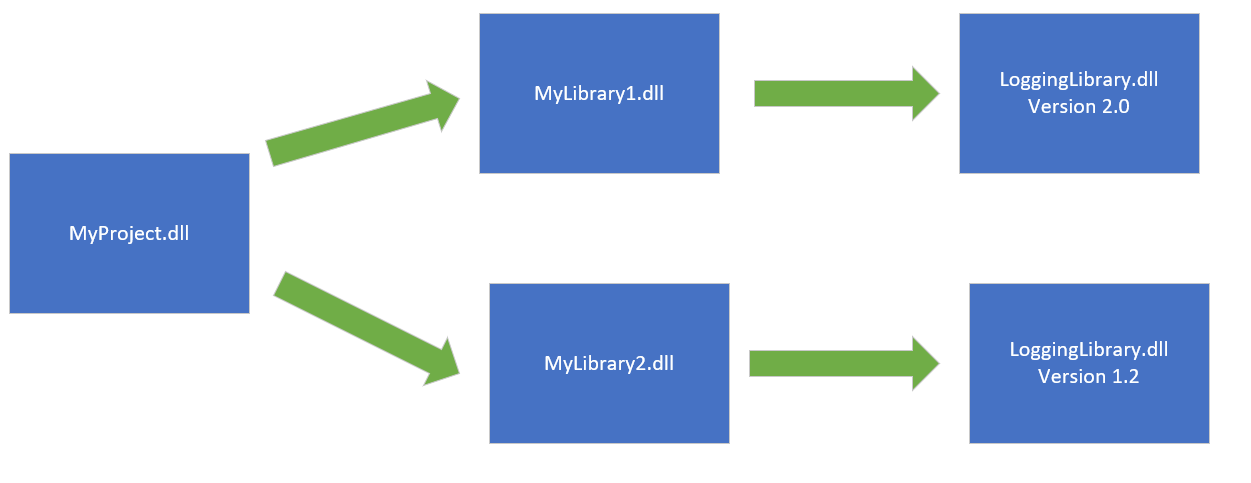

- Systems should have cross-cutting concerns addressed and shared in an easy and consistent way

- Systems operate independently for the purpose of testing and debugging

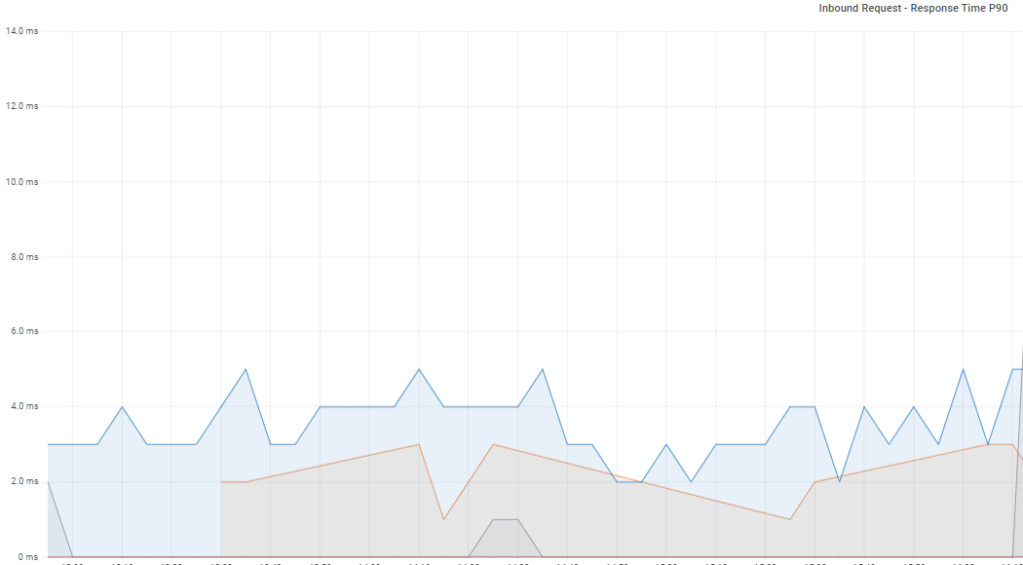

- Systems have consistent, agreed-upon telemetry for monitoring

- Telemetry is a solved cross-cutting concern for non-domain-specific metrics

- Systems are built on modern, up-to-date frameworks and platforms

- Systems use Continuous Integration as a principle, not a tool: merge and deploy often and in small increments

- A system scales horizontally, both within a site and across multiple sites. With this comes redundancy: users experience zero downtime during instance and site outages

- Systems have owners, who are responsible for the long-term health of the systems and who have contributors as customers
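
As an illustration of two of the principles above (cross-cutting concerns shared in a consistent way, and telemetry being solved for non-domain-specific metrics), here is a rough sketch in Python. Everything in it is an assumption made for the example: the `instrumented` decorator, the in-memory `CALLS`, `ERRORS` and `SECONDS` registries, and the `place_order` function are all hypothetical, and a real system would export to whatever monitoring backend the organisation has agreed on.

```python
import time
from collections import defaultdict
from functools import wraps
from typing import Any, Callable, DefaultDict

# Hypothetical in-process registries standing in for a real metrics backend.
CALLS: DefaultDict[str, int] = defaultdict(int)
ERRORS: DefaultDict[str, int] = defaultdict(int)
SECONDS: DefaultDict[str, float] = defaultdict(float)


def instrumented(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Record call count, error count and total duration under the given name."""

    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        @wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            started = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                ERRORS[name] += 1
                raise  # telemetry must never swallow the original error
            finally:
                CALLS[name] += 1
                SECONDS[name] += time.perf_counter() - started

        return wrapper

    return decorator


@instrumented("orders.place")
def place_order(quantity: int) -> int:
    """Domain code: contains no timing or counting logic of its own."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    return quantity * 2
```

The design choice worth noting is that the domain function stays free of monitoring code, so every team gets the same generic metrics by adding one line, and only domain-specific metrics need any thought.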